(Paper) MitoEM dataset: large-scale 3D mitochondria instance segmentation

This tutorial describes how to reproduce the results reported in our paper, specifically how to use U2D-BC for instance segmentation of mitochondria in electron microscopy (EM) images:

Wei, Donglai, et al. "MitoEM dataset: Large-scale 3D mitochondria instance segmentation from EM images." International Conference on Medical Image Computing and Computer-Assisted Intervention. Cham: Springer International Publishing, 2020.

Problem description

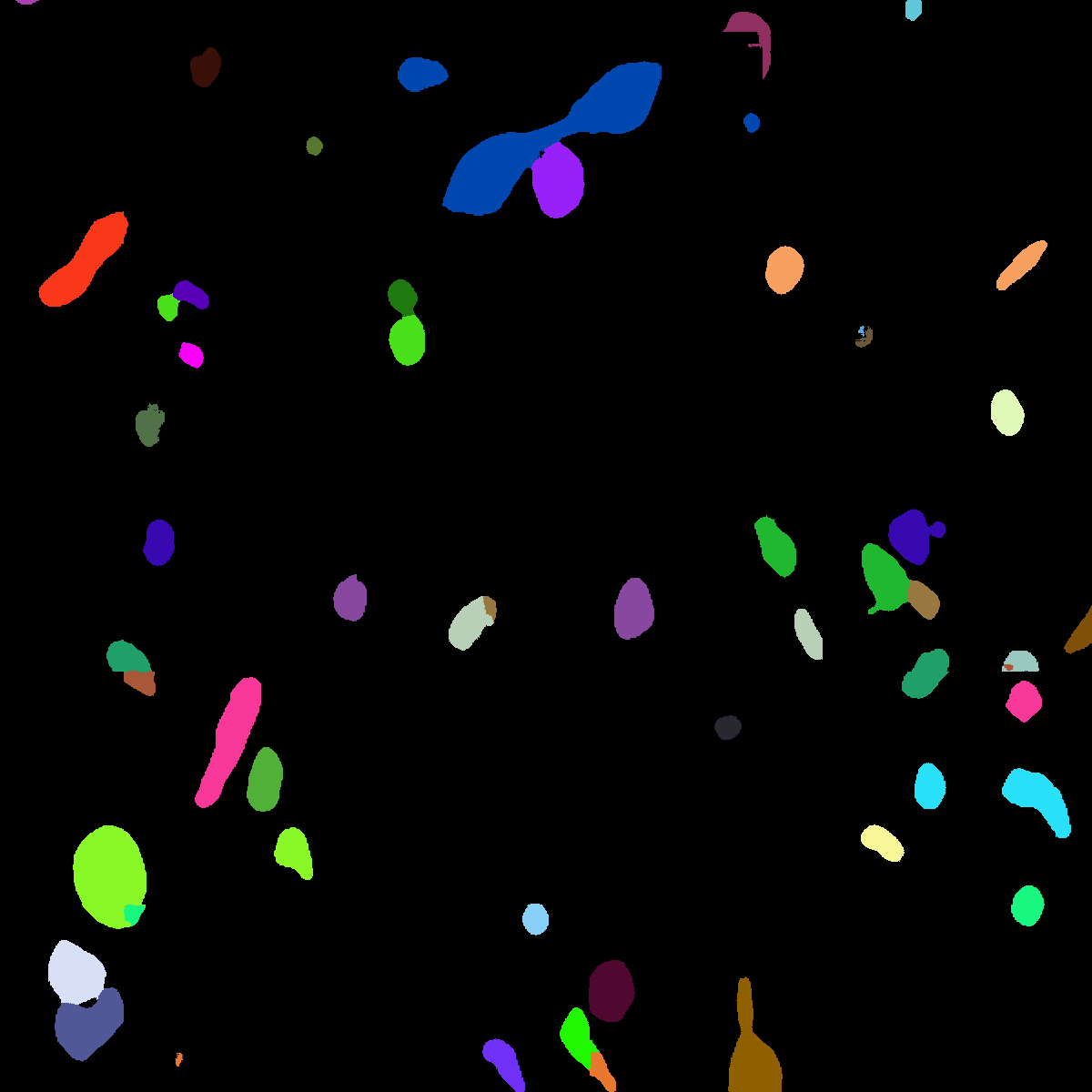

The goal is to automatically segment and identify mitochondria instances in EM images. To solve this task, pairs of EM images and their corresponding instance segmentation labels are provided. An example pair is shown below:

MitoEM-H tissue image sample.

Its corresponding instance mask.

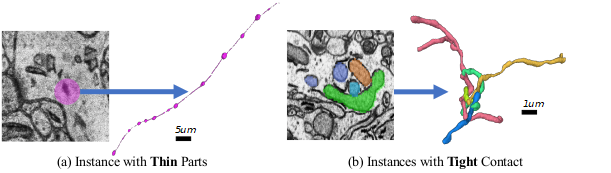
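To make the idea of an instance mask concrete, the toy sketch below counts the instances in a small 2D label array. It assumes the usual convention that 0 marks background and each mitochondrion carries its own positive integer ID. The array values are made up for illustration; real MitoEM labels are 3D volumes that would be read from image files rather than written out as lists.

```python
from collections import Counter

# Toy 2D instance mask (hypothetical values): 0 is background and every
# mitochondrion carries its own positive integer ID.
toy_mask = [
    [0, 0, 1, 1, 0],
    [0, 0, 1, 1, 0],
    [2, 2, 0, 0, 3],
    [2, 2, 0, 3, 3],
]


def instance_sizes(mask):
    """Return {instance_id: pixel_count}, ignoring the background label 0."""
    counts = Counter(v for row in mask for v in row)
    counts.pop(0, None)  # drop background if present
    return dict(sorted(counts.items()))


sizes = instance_sizes(toy_mask)
print(f"{len(sizes)} instances: {sizes}")  # prints "3 instances: {1: 4, 2: 4, 3: 3}"
```

Unlike a semantic (binary) mask, touching mitochondria keep distinct IDs here, which is what lets an evaluation tell them apart.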

The MitoEM dataset is composed of two EM volumes from human and rat cortices, named MitoEM-H and MitoEM-R respectively. Each volume has a size of (1000,4096,4096) voxels along the (z,x,y) axes and is divided into (400,4096,4096) for training, (100,4096,4096) for validation and (500,4096,4096) for testing. Both tissues contain multiple instances that entangle with each other, with unclear boundaries and complex morphology, e.g., (a) mitochondria-on-a-string (MOAS) instances are connected by thin microtubules, and (b) multiple instances can entangle with each other.
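As a quick sanity check on the volume split, the sketch below computes how many slices each split holds and roughly how much uncompressed storage it needs, using the slice ranges [0-399], [400-499] and [500-999] from the data partition. It assumes 8-bit grayscale images (1 byte per voxel); that is an assumption, not something stated in this tutorial, and label volumes stored with wider integer types would be proportionally larger.

```python
# Slice ranges along z for each split of one MitoEM volume (MitoEM-H or
# MitoEM-R), taken from the data partition described in this tutorial.
SPLITS = {
    "train": range(0, 400),
    "val": range(400, 500),
    "test": range(500, 1000),
}
HEIGHT, WIDTH = 4096, 4096
BYTES_PER_VOXEL = 1  # assumption: 8-bit grayscale EM images


def split_summary(splits, height, width, bytes_per_voxel):
    """Return {split: (num_slices, size_in_GiB)} for uncompressed images."""
    summary = {}
    for name, z_range in splits.items():
        n = len(z_range)
        size_gib = n * height * width * bytes_per_voxel / 2**30
        summary[name] = (n, size_gib)
    return summary


summary = split_summary(SPLITS, HEIGHT, WIDTH, BYTES_PER_VOXEL)
for name, (n, gib) in summary.items():
    print(f"{name:>5}: {n:4d} slices, ~{gib:.2f} GiB")
total = sum(n for n, _ in summary.values())
print("total slices:", total)
```

Each 4096×4096 slice is 2^24 voxels, i.e. 1/64 GiB at one byte per voxel, so a full 1000-slice volume needs about 15.6 GiB before compression.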

Data preparation

You first need to download the MitoEM dataset.

Each volume should contain 1000 EM images, whereas labels are available only for the first 500. The partition should be as follows:

- Training data: 400 images, i.e. EM images and labels in the [0-399] range. These labels are stored separately in a folder called mito-train-v2.
- Validation data: 100 images, i.e. EM images and labels in the [400-499] range. These labels are stored separately in a folder called mito-val-v2.
- Test data: 500 images, i.e. EM images in the [500-999] range.

Once you have downloaded the data, you need to create a directory tree as described in Data preparation.
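Since the labels already come split into mito-train-v2 and mito-val-v2, only the 1000 EM images of each volume need to be grouped by slice index. The sketch below does that with the standard library. It assumes file names whose zero-padded slice numbers sort correctly (the `im0007.png` pattern is hypothetical), and the `dest_root/<split>/` target layout is a placeholder: adapt it to the directory tree described in Data preparation.

```python
import shutil
from pathlib import Path

# Slice-index ranges [start, stop) from the data partition in this tutorial.
SPLITS = {"train": (0, 400), "val": (400, 500), "test": (500, 1000)}


def partition_by_index(files):
    """Group per-slice EM image files into train/val/test by slice index.

    Files are sorted by name first, so this assumes zero-padded slice
    numbers in the names. Slicing tolerates shorter lists, so a missing
    tail simply yields fewer files instead of raising an error.
    """
    files = sorted(files)
    return {name: files[start:stop] for name, (start, stop) in SPLITS.items()}


def copy_split(parts, dest_root):
    """Copy each group into dest_root/<split>/ (hypothetical folder layout)."""
    for split, paths in parts.items():
        out_dir = Path(dest_root) / split
        out_dir.mkdir(parents=True, exist_ok=True)
        for src in paths:
            shutil.copy2(src, out_dir / Path(src).name)


names = [f"im{i:04d}.png" for i in range(1000)]  # hypothetical file names
parts = partition_by_index(names)
print({k: len(v) for k, v in parts.items()})  # prints {'train': 400, 'val': 100, 'test': 500}
```

In practice you would pass real paths (for example from `Path(...).glob("*.png")`) to `partition_by_index` and then call `copy_split` with your chosen destination.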

Configuration file

To reproduce the exact results of our manuscript, you need to use the mitoem.yaml configuration file.

Then modify DATA.TRAIN.PATH and DATA.TRAIN.GT_PATH with the paths to your training EM images and labels, respectively. Do the same for the validation data with DATA.VAL.PATH and DATA.VAL.GT_PATH, and for the test data with DATA.TEST.PATH.
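The options above are named in dotted form, while a YAML configuration file nests them. Assuming mitoem.yaml uses that usual nested layout, the standard-library sketch below turns dotted overrides into nested form and prints the matching YAML block, so you can check where each path goes. The paths are hypothetical placeholders, and the snippet does not edit mitoem.yaml itself; merge the result by hand or with a YAML library.

```python
# Hypothetical local paths: replace them with wherever you stored the data.
OVERRIDES = {
    "DATA.TRAIN.PATH": "/data/mitoem_h/train/x",
    "DATA.TRAIN.GT_PATH": "/data/mitoem_h/train/y",
    "DATA.VAL.PATH": "/data/mitoem_h/val/x",
    "DATA.VAL.GT_PATH": "/data/mitoem_h/val/y",
    "DATA.TEST.PATH": "/data/mitoem_h/test/x",
}


def nest(overrides):
    """Turn {'A.B.C': v} into {'A': {'B': {'C': v}}}."""
    root = {}
    for dotted, value in overrides.items():
        node = root
        *parents, leaf = dotted.split(".")
        for key in parents:
            node = node.setdefault(key, {})
        node[leaf] = value
    return root


def to_yaml(tree, indent=0):
    """Render a nested dict of strings as YAML block-mapping lines.

    Values are written unquoted, which is fine for plain file paths but
    not for strings containing YAML special characters such as ': '.
    """
    lines = []
    pad = "  " * indent
    for key, value in tree.items():
        if isinstance(value, dict):
            lines.append(f"{pad}{key}:")
            lines.extend(to_yaml(value, indent + 1))
        else:
            lines.append(f"{pad}{key}: {value}")
    return lines


print("\n".join(to_yaml(nest(OVERRIDES))))
```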

Run

To run it via the command line or Docker, follow the same steps as described in Run.

Results

The results follow the same structure as explained in Results. They should look similar to the following:

MitoEM-H train sample’s GT.

MitoEM-H train prediction.

MitoEM challenge submission

There is an open challenge for the MitoEM dataset: https://mitoem.grand-challenge.org/

To prepare .h5 files from the resulting instance predictions in .tif format, you can use the script tif_to_h5.py. The instances of both the human and rat tissues need to be provided, and the files must be named 0_human_instance_seg_pred.h5 and 1_rat_instance_seg_pred.h5, respectively. Find the full details on the challenge’s evaluation page.
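Because the challenge expects exactly these two file names, a small pre-upload check can catch naming mistakes. The sketch below is a hypothetical helper, not part of tif_to_h5.py; it only checks file names and does not validate the contents or internal layout of the .h5 files, which the evaluation page defines.

```python
from pathlib import Path

# File names required by the challenge, as stated in this tutorial.
REQUIRED = ("0_human_instance_seg_pred.h5", "1_rat_instance_seg_pred.h5")


def missing_from(names):
    """Return the required file names that are absent from `names`."""
    present = set(names)
    return [name for name in REQUIRED if name not in present]


def missing_submission_files(submission_dir):
    """List required .h5 files not found directly inside submission_dir."""
    files = (p.name for p in Path(submission_dir).iterdir() if p.is_file())
    return missing_from(files)
```

For example, `missing_submission_files("submission")` returning an empty list means both expected names are present in that (hypothetical) folder.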